Abstract

Recent advancements in large vision-language models have enabled visual object detection in open-vocabulary scenarios, where object classes are defined as free text at inference time.

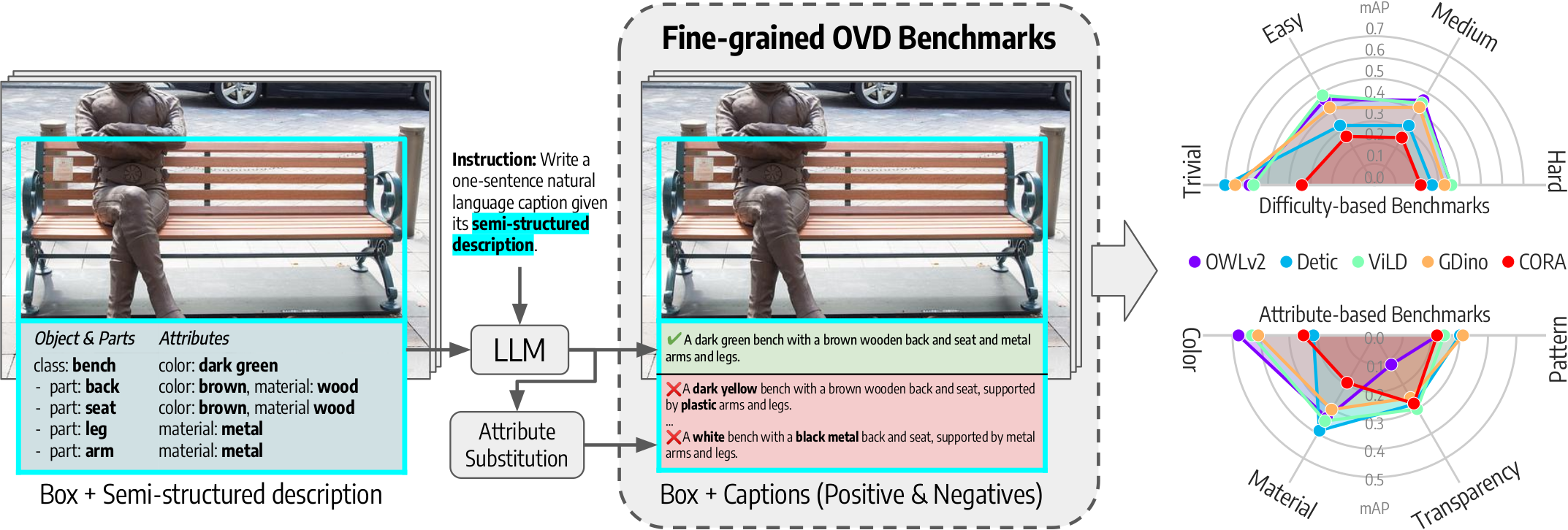

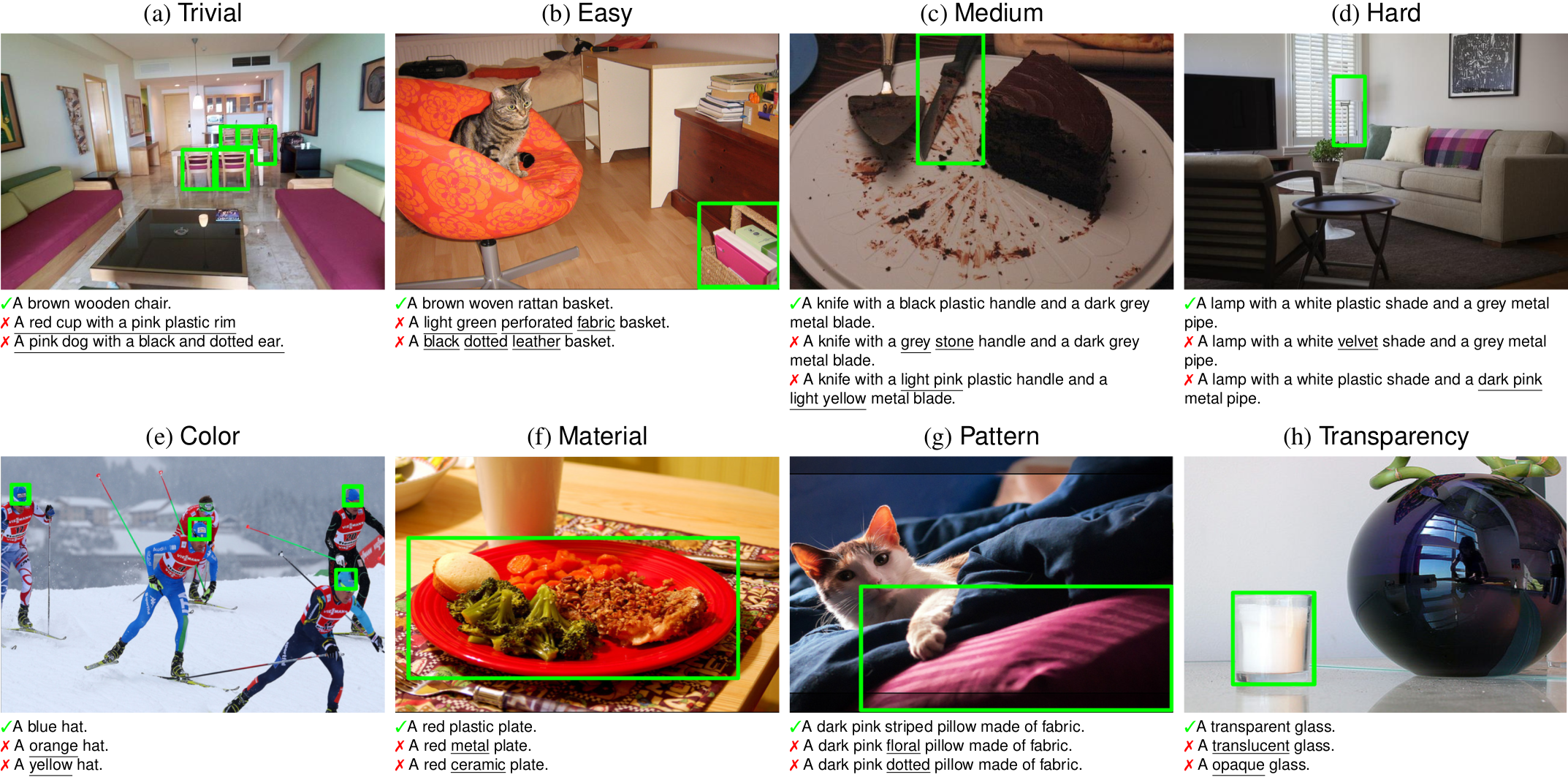

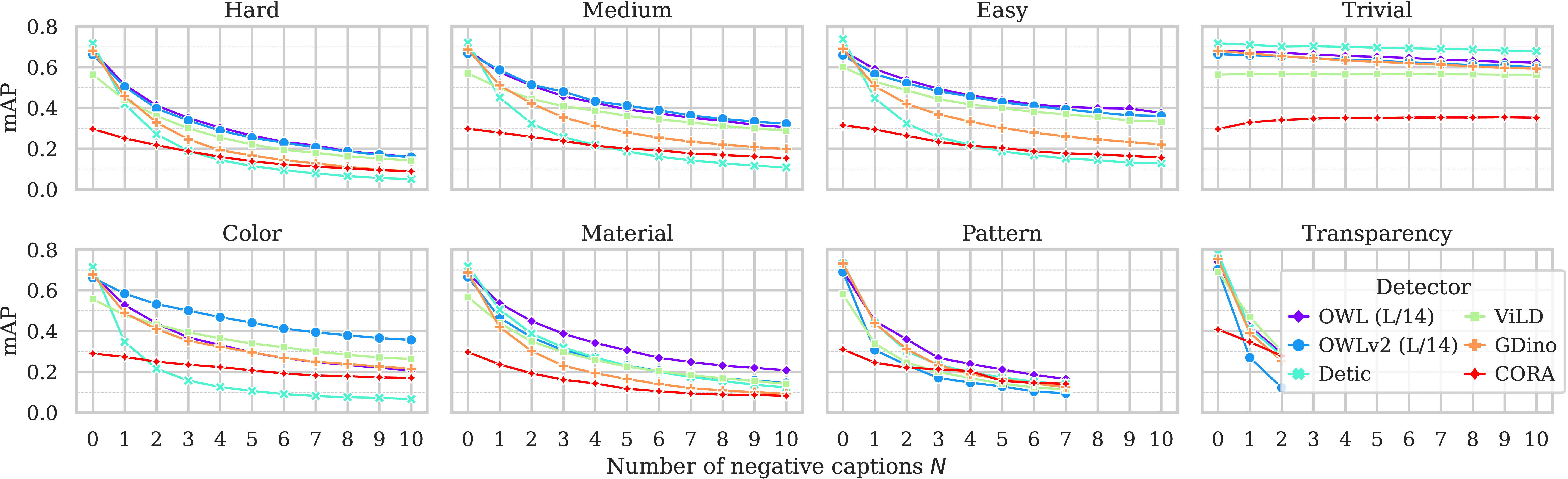

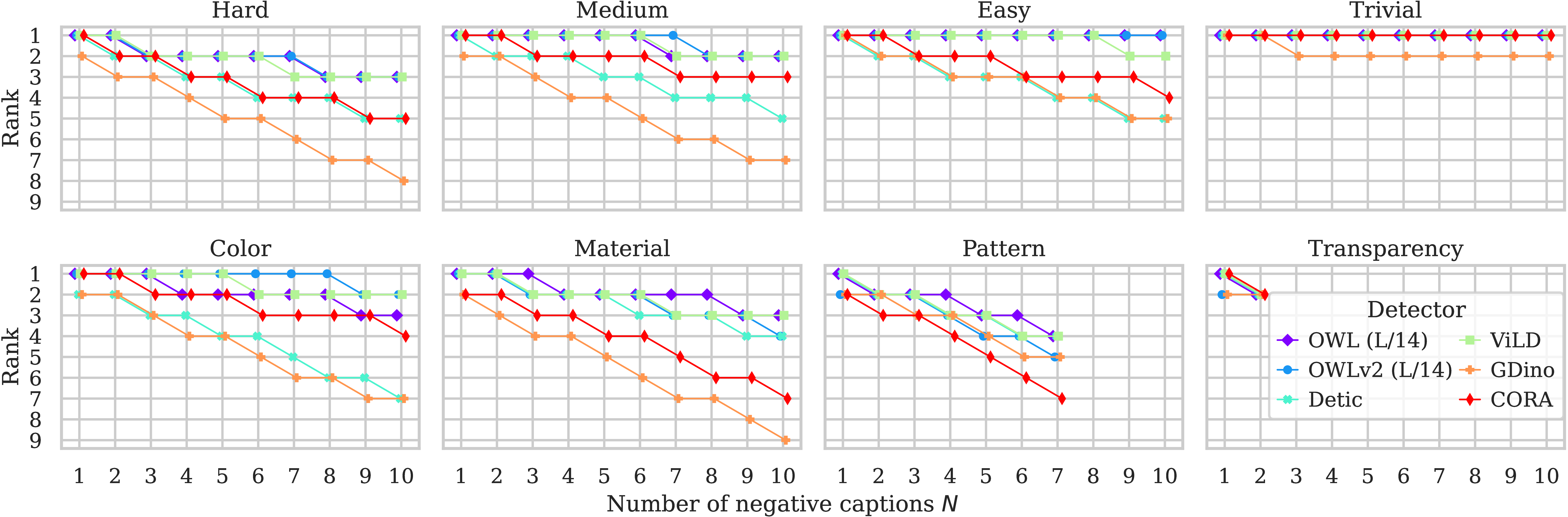

In this paper, we probe state-of-the-art methods for open-vocabulary object detection to determine to what extent they understand fine-grained properties of objects and their parts. To this end, we introduce an evaluation protocol based on dynamic vocabulary generation to test whether models detect, discern, and assign the correct fine-grained description to objects in the presence of hard-negative classes. We contribute a benchmark suite of increasing difficulty that probes different properties such as color, pattern, and material. We then evaluate several state-of-the-art open-vocabulary object detectors using the proposed protocol and find that most existing solutions, which shine in standard open-vocabulary benchmarks, struggle to accurately capture and distinguish finer object details.

We conclude the paper by highlighting the limitations of current methodologies and exploring promising research directions to overcome the discovered drawbacks.
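As a rough illustration of the idea behind dynamic vocabulary generation with hard negatives, the sketch below builds a per-object vocabulary by swapping one attribute of the correct description for a different value of the same type. It is a minimal sketch under stated assumptions, not the benchmark's actual implementation: the attribute pools, the caption template, and the names `build_vocabulary` and `is_correct` are all hypothetical, and the number of negatives is only one possible way to vary difficulty.

```python
import random

# Hypothetical attribute pools (assumption): the benchmark's real values
# are not specified in this text.
ATTRIBUTE_POOLS = {
    "color": ["red", "blue", "green", "black"],
    "material": ["wooden", "metal", "plastic", "leather"],
    "pattern": ["striped", "dotted", "plain", "checkered"],
}


def build_vocabulary(template, attributes, probe, num_negatives, rng):
    """Return (positive, negatives) for a single annotated object.

    template      -- caption template, e.g. "a {color} {material} chair"
    attributes    -- ground-truth attribute values for the object
    probe         -- attribute type to perturb ("color", "material", ...)
    num_negatives -- how many hard negatives to generate
    rng           -- random.Random instance, for reproducibility
    """
    positive = template.format(**attributes)
    # Candidate replacements: same attribute type, different value.
    candidates = [v for v in ATTRIBUTE_POOLS[probe] if v != attributes[probe]]
    chosen = rng.sample(candidates, num_negatives)
    # Each negative differs from the positive in exactly one attribute.
    negatives = [template.format(**{**attributes, probe: v}) for v in chosen]
    return positive, negatives


def is_correct(scores, positive):
    """Check whether the highest-scoring vocabulary entry is the positive.

    scores -- mapping from vocabulary entry to a detector confidence
              (hypothetical interface, assumed for illustration).
    """
    return max(scores, key=scores.get) == positive
```

For example, calling `build_vocabulary("a {color} {material} chair", {"color": "red", "material": "wooden"}, "color", 2, random.Random(0))` yields the positive "a red wooden chair" together with two negatives that differ only in color. A detector would then be scored on whether it ranks the positive above those negatives for the detected object.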

This work has received financial support from the European Union - Next Generation EU, Mission 4 Component 1, CUP B53D23026090001 (a MUltimedia platform for Content Enrichment and Search in audiovisual archives - MUCES PRIN 2022 PNRR P2022BW7CW).
